Speed and power: fine-tuning distilled transformer models

Thu 25 February 2021

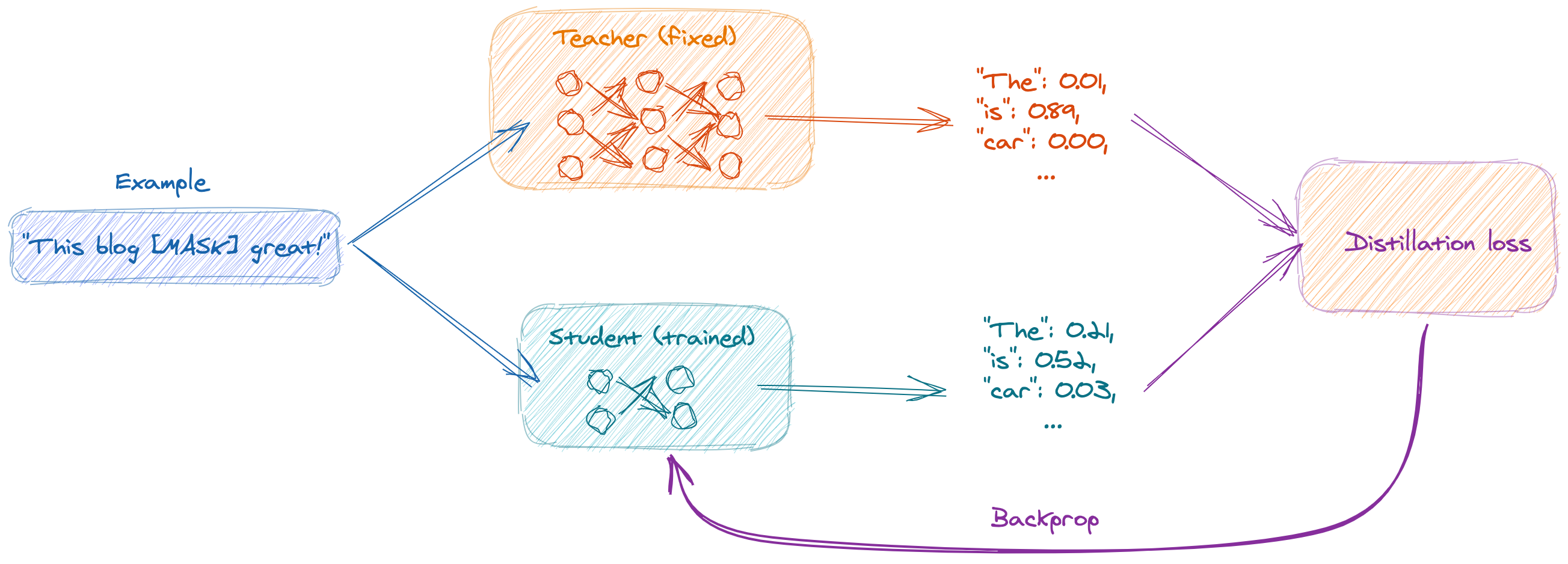

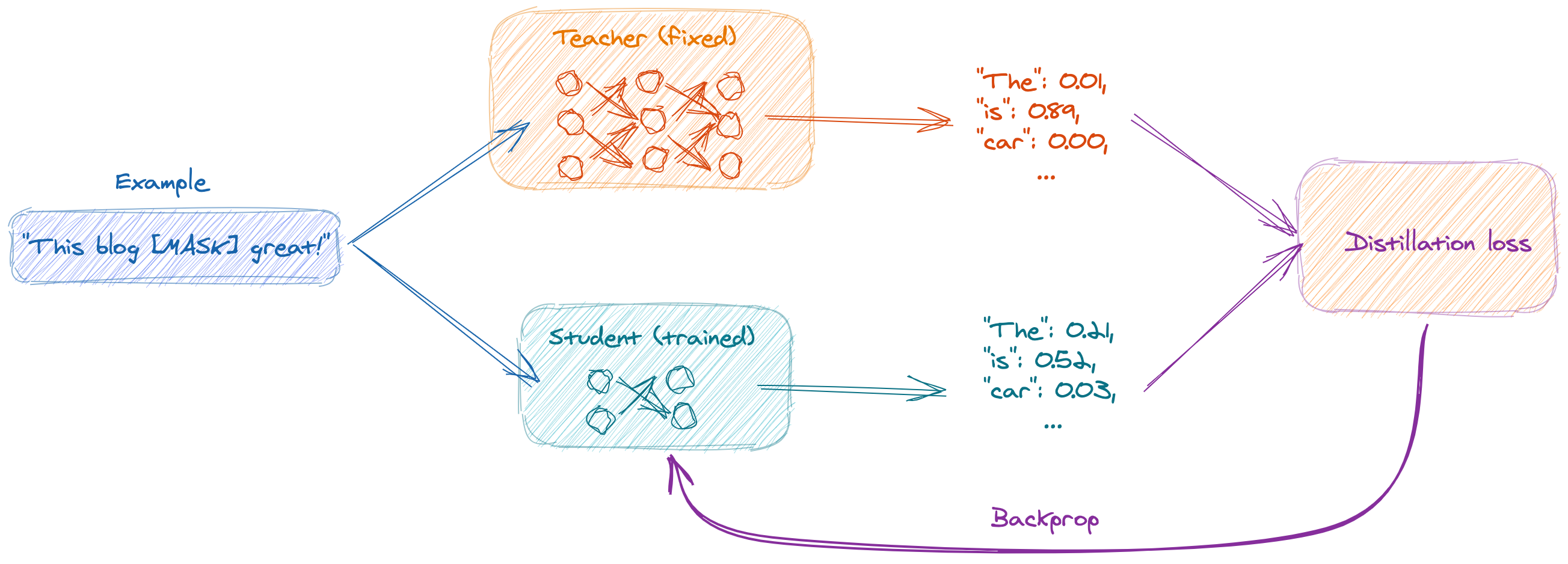

Leaner and faster Transformers with distillation. Fine-tuning BERT-tiny in a couple of minutes with PyTorch.

Fri 29 January 2021

Fine-tuning DistilBERT to tag toxic comments on Wikipedia, using TensorFlow and Hugging Face's transformers library.